The debate on limiting lethal autonomous weapons has hotted up, especially in the light of the Government’s new Defence AI Strategy.

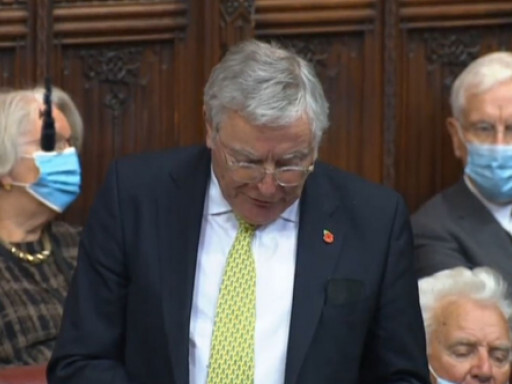

This is what I said before the report was published, when the Armed Forces Bill went through the House of Lords:

We eagerly await the defence AI strategy coming down the track but, as the noble Lord said, the very real fear is that autonomous weapons will undermine the international laws of war, and the noble and gallant Lord made clear the dangers of that. In consequence, a great number of questions arise about liability and accountability, particularly in criminal law. Such questions are important enough in civil society, and we have an AI governance White Paper coming down the track, but in military operations it will be crucial that they are answered.

From the recent exchange that the Minister had with the House on 1 November during an Oral Question that I asked about the Government’s position on the control of lethal autonomous weapons, I believe that the amendment is required more than ever. The Minister, having said:

“The UK and our partners are unconvinced by the calls for a further binding instrument”

to limit lethal autonomous weapons, said further:

“At this time, the UK believes that it is actually more important to understand the characteristics of systems with autonomy that would or would not enable them to be used in compliance with”

international human rights law,

“using this to set our potential norms of use and positive obligations.”

That seems to me to be a direct invitation to pass this amendment. Any review of this kind should be conducted in the light of day, as we suggest in the amendment, in a fully accountable manner.

However, later in the same short debate, as noted by the noble Lord, Lord Browne, the Minister reassured us, as my noble friend Lady Smith of Newnham noted in Committee, that:

“UK Armed Forces do not use systems that employ lethal force without context-appropriate human involvement.”

Later, the Minister said:

“It is not possible to transfer accountability to a machine. Human responsibility for the use of a system to achieve an effect cannot be removed, irrespective of the level of autonomy in that system or the use of enabling technologies such as AI.”—[Official Report, 1/11/21; col. 994-95.]

The question is there. Does that mean that there will always be a human in the loop and there will never be a fully autonomous weapon deployed? If the legal duties are to remain the same for our Armed Forces, these weapons must surely at all times remain under human control and there will never be autonomous deployment.

However, that has recently been directly contradicted. The noble Lord, Lord Browne, has described the rather chilling Times podcast interview with General Sir Richard Barrons, the former Commander Joint Forces Command. He contrasted the military role of what he called “soft-body humans” (I must admit, a phrase I had not encountered before) with that of autonomous weapons, and confirmed that weapons can now apply lethal force without any human intervention. He said that we cannot afford not to invest in these weapons. New technologies are changing how military operations are conducted. As we know, autonomous drone warfare is already a fact of life: Turkish autonomous drones have been deployed in Libya. Why are we not facing up to that in this Bill?

I sometimes get the feeling that the Minister believes that, if only we read our briefs from the MoD diligently enough and listened hard enough, we would accept what she is telling us about the Government’s position on lethal autonomous weapons. But there are fundamental questions at stake here which remain as yet unanswered. A review of the kind suggested in this amendment would be instrumental in answering them.

27th November 2021
