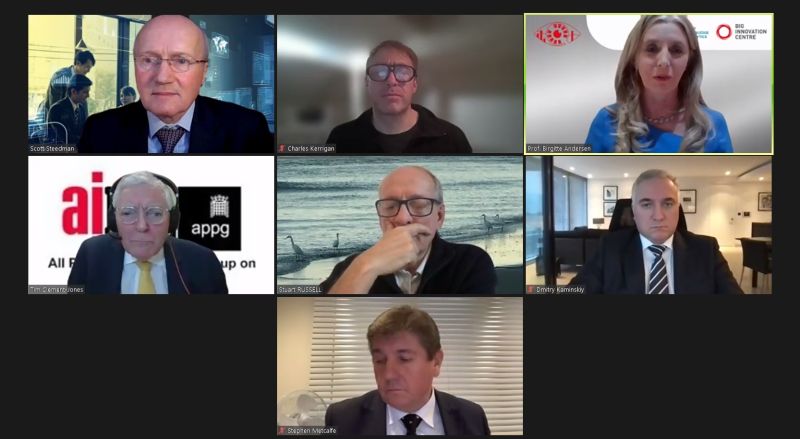

It was good to launch the new report, Artificial Intelligence Industry in the UK Landscape Overview 2021: Companies, Investors, Influencers and Trends, with its authors from Deep Knowledge Analytics and the Big Innovation Centre, my APPG AI Co-Chair Stephen Metcalfe MP, Reith lecturer Professor Stuart Russell, Charles Kerrigan of CMS and Dr Scott Steedman of the BSI.

Here is the full report online:

https://mindmaps.innovationeye.com/reports/ai-in-uk

And here is what I said about AI Regulation at the launch:

A little under five years ago we started work on the AI Select Committee inquiry that led to our report, AI in the UK: Ready, Willing and Able? The Hall/Pesenti Review of 2017 came out at around the same time.

Since then, many great institutions have played a positive role in the development of ethical AI. Some are newish, like the Centre for Data Ethics and Innovation, the AI Council and the Office for AI; others are established regulators such as the ICO, Ofcom, the Financial Conduct Authority and the CMA, which have put together a new Digital Regulation Cooperation Forum to pool expertise in this field. This work includes sandboxing and input from a variety of expert institutes, such as the Alan Turing Institute, the Open Data Institute, the Ada Lovelace Institute, the OII and the British Standards Institution, on areas such as risk assessment, audit, data trusts and standards. Our Intellectual Property Office, too, is currently grappling with issues relating to IP created by AI.

The publication of the National AI Strategy this autumn is a good time to take stock of where we are heading on regulation. Above all, we need to be clear, as organisations such as techUK are, that regulation is not the enemy of innovation. It can in fact be its stimulus, and the key to gaining and retaining public trust in AI and its adoption, so that we can realise the benefits and minimise the risks.

I have personally just completed a very intense examination of the Government’s proposals on online safety, where many of the concerns derive from the power of algorithms to target messages and amplify them. The essence of our recommendations revolves around safety by design and risk assessment.

As the international work of the Council of Europe, the OECD, UNESCO, the Global Partnership on AI and the EU, with its proposal for an AI Act, makes evident, we in the UK need to move forward with proposals for a risk-based regulatory framework, which I hope will be contained in the forthcoming AI Governance White Paper.

Some of the signs are good. The National AI Strategy accepts that we need to prepare for AGI, and it also talks about:

- public trust and the need for trustworthy AI,

- that Government should set an example,

- the need for international standards and an ecosystem of AI assurance tools

and in fact the Government have recently produced a set of Transparency Standards for AI in the public sector.

On the other hand:

- Despite little appetite in the business or research communities, the Government are consulting on major changes to the GDPR post-Brexit, in particular the suggestions that we get rid of Article 22, which is the one part of the GDPR dealing with a human in the loop, and that firms should no longer be required to have a Data Protection Officer (DPO) or carry out Data Protection Impact Assessments (DPIAs)

- Most recently, after a year’s work by the Council of Europe’s Ad Hoc Committee on the elements of a legal framework on AI, the Government at the very last minute entered a reservation, saying they could not yet support the document going to the Council because more gap analysis was needed, despite extensive work on this in the feasibility study

- We also have no settled regulation for intrusive AI technology such as live facial recognition

- Above all, it is not yet clear whether they are still wedded to sectoral rather than horizontal regulation

So I hope that when the White Paper does emerge, it recognises that we need a considerable degree of convergence between ourselves, the EU and members of the CoE in particular, for the benefit of our developers and cross-border business, and that a risk-based form of horizontal regulation is required which operationalises the common ethical values we have all come to accept, such as the OECD principles.

Above all, this means agreeing on standards for risk and impact assessments, alongside tools for audit and continuous monitoring for higher-risk applications. That way, I believe, we can draw the US into the fold as well.

This is, of course, not to mention the whole defence and lethal autonomous systems space, the subject of Stuart Russell’s second Reith Lecture, which, despite the promise of a Defence AI Strategy, is another and much more depressing story!
